VALORANT Systems Health Series - Voice and Chat Toxicity

This article is a part of a series of deep dives on topics in the Competitive/Social & Player Dynamics space of VALORANT. Learn more about the series here.

Hi again! Sara and Brian, members of the Social and Player Dynamics team on VALORANT, back with another update to chat about some incoming work on our pipeline. This is a tough one, so let’s jump right into it. Today, we’re going to talk about comms abuse in VALORANT and our strategy for tackling it.

INTRODUCTION - THE WAY INTO THE PROBLEM

Comms toxicity covers behavior over in-game voice and text chat that we consider unwanted. While we can never fully eliminate bad conduct by individuals, we can work to deter behavior such as insults, threats, harassment, or offensive language through our game systems. There’s also room to encourage “pro-social” behavior.

As we’ve said before, we’re dedicated to being a strong force in paving the way for a brighter future for all when it comes to comms-related toxicity in gaming. We believe there’s been healthy progress in this area; however, we can see there is more to do.

While there is no silver bullet, we want to walk you through some of the steps we’ve taken (and the measured results), as well as the additional steps we’re planning to take to improve the chat and voice experience in VALORANT.

WHAT HAVE WE DONE SO FAR?

First, let’s talk about the work we’ve done up to this point. When it comes to unwanted communication in our game, there are two primary forms of detection that we rely on.

Player Feedback

The first form of detection is player feedback, specifically reports! One of the reasons we highly encourage all of you to report bad behavior (whether that's someone abusing chat, going AFK, or purposely throwing) is that we actively track and use that data to administer punishments.

To be clear, this doesn’t mean that anyone who gets reported will get punished. We look for repeat offenders and administer escalating penalties, ranging from warnings all the way up to permanent bans in the most severe cases.

We’ve previously discussed player behavior “ratings” in the context of AFKs in VALORANT. Similarly, we’ve created an internal “comms rating” for each player in VALORANT so that repeat offenders are dealt with very quickly.

On the other hand, there are some things that people type and say in our game that we consider “zero tolerance” offenses. What was said in these cases makes it clear that we simply do not want the player who said it in VALORANT. When we see reports of this type of language, we escalate to harsh punishments immediately. (Currently, these punishments happen after the match has ended; more on that later.)

Muted Words List

As some of you have likely experienced, automatic text detection isn’t perfect (players have found pretty “creative” ways to bypass our systems), so “zero tolerance” words will sometimes slip past our detection. For this reason, we recently implemented a “Muted Words List” that lets you manually filter out words and phrases you don’t want to see in the game.

This serves two functions:

First, knowing that our automatic detection systems aren’t perfect, we want to give you some agency to filter unwanted communication in the game. Second, we plan to incorporate the words you filter with the Muted Words List into future iterations of our automatic detection (so, in a way, using the Muted Words List will improve our automatic detection in the future!).
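For the curious, here is a minimal sketch of what a personal muted-words filter could look like on the receiving end of chat. This is not how VALORANT's Muted Words List is actually implemented: the helper name `apply_muted_words`, the case-insensitive whole-word matching, and masking each hit with asterisks are all our own illustrative assumptions.

```python
import re
from typing import Iterable


def apply_muted_words(message: str, muted: Iterable[str]) -> str:
    """Mask every muted word or phrase in `message` (hypothetical helper).

    Matching is case-insensitive and restricted to whole words, so muting
    "bad" masks "BAD" but leaves "badge" alone. Both rules are assumptions
    made for this illustration.
    """
    for phrase in muted:
        if not phrase.strip():
            continue  # skip empty entries in the player's list
        pattern = re.compile(r"\b" + re.escape(phrase) + r"\b", re.IGNORECASE)
        # Replace each match with asterisks of the same length.
        message = pattern.sub(lambda m: "*" * len(m.group(0)), message)
    return message


print(apply_muted_words("You are BAD at this, nice badge", ["bad"]))
```

Because the filter runs only on what one player sees, it gives that player control without waiting for automatic detection to catch up, which is the first of the two functions described above.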

WHAT WERE THE RESULTS?

So far, we’ve explained to you some of the things we’ve done to detect, punish, and mitigate toxicity in VALORANT. This is only the tip of the iceberg and we have a long journey ahead of us, but let’s take a look at some of the results of our actions.

Chat Mutes

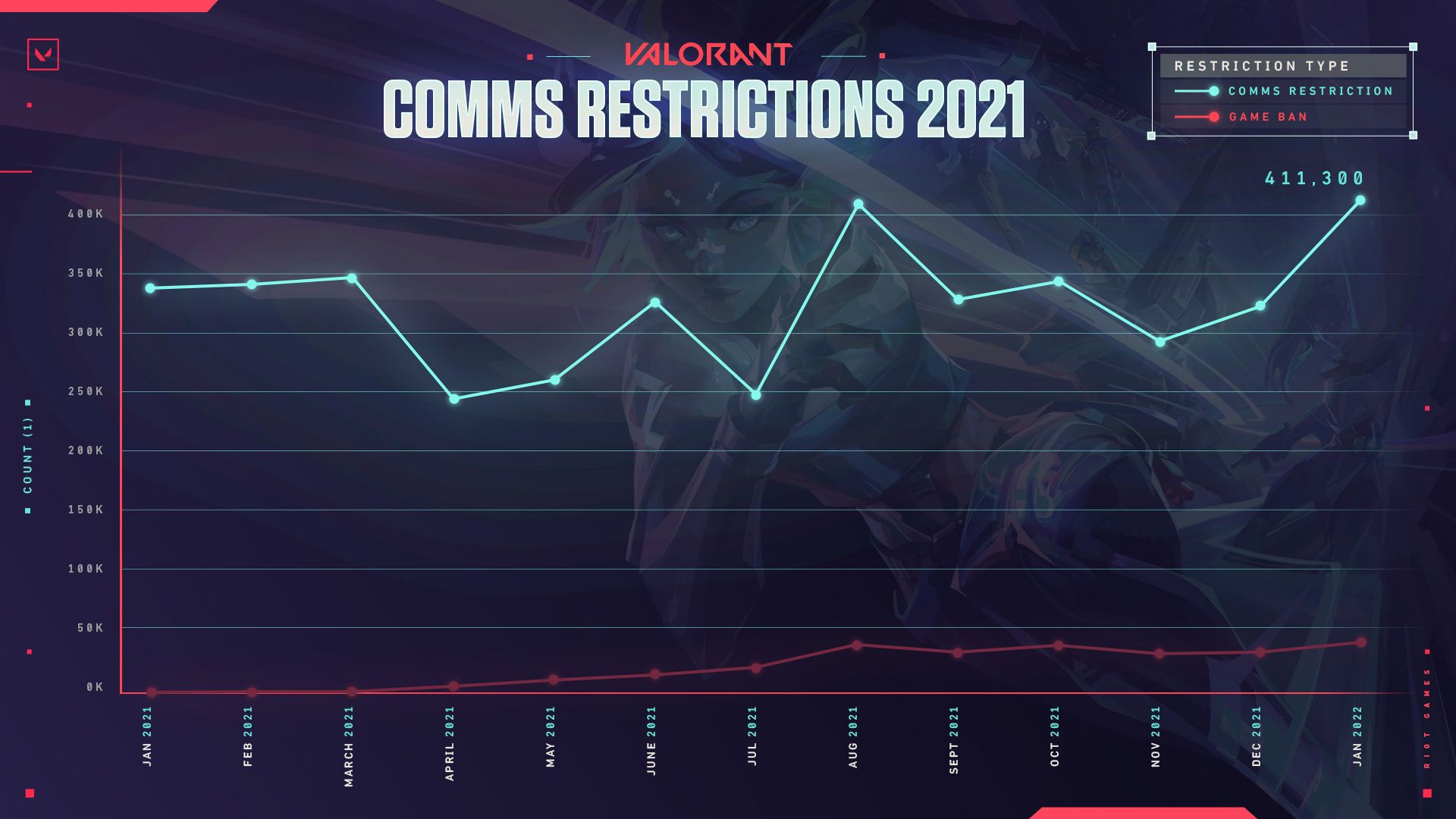

First are chat mutes, the most common form of initial punishment we administer for alarming text or voice comms. In January alone, we issued over 400,000 text and voice chat mutes. These are triggered automatically either when a player types something in chat that we detect as abusive, or when enough reports have accumulated over time (from different players in different games) that we have strong confidence a player is abusing text and/or voice chat.
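The report-driven path above (reports from different players in different games building up until confidence is high) can be pictured with a small sketch. The real criteria are not public: the `Report` type, the `should_mute` function, and the thresholds of three distinct matches and three distinct reporters are hypothetical values chosen only to show why counting distinct sources matters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Report:
    reporter_id: str  # player who filed the report
    match_id: str     # match in which the report was filed
    category: str     # e.g. "comms", "afk", "throwing"


def should_mute(reports, min_matches=3, min_reporters=3):
    """Hypothetical rule: mute only when comms reports come from enough
    distinct reporters across enough distinct matches, so one angry lobby
    or one player spamming reports cannot trigger a mute on its own."""
    comms = [r for r in reports if r.category == "comms"]
    matches = {r.match_id for r in comms}
    reporters = {r.reporter_id for r in comms}
    return len(matches) >= min_matches and len(reporters) >= min_reporters


one_lobby = [Report(f"p{i}", "m1", "comms") for i in range(4)]
spread_out = [Report("a", "m1", "comms"), Report("b", "m2", "comms"),
              Report("c", "m3", "comms")]
print(should_mute(one_lobby), should_mute(spread_out))
```

Requiring both distinct matches and distinct reporters is one simple way to express "strong confidence"; a production system would likely weigh many more signals.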

The road doesn’t end here! We are working on improving this model so we can confidently detect more words that may currently be dodging the system, and we’ll continue to push for improvements on this front.

Game Restrictions

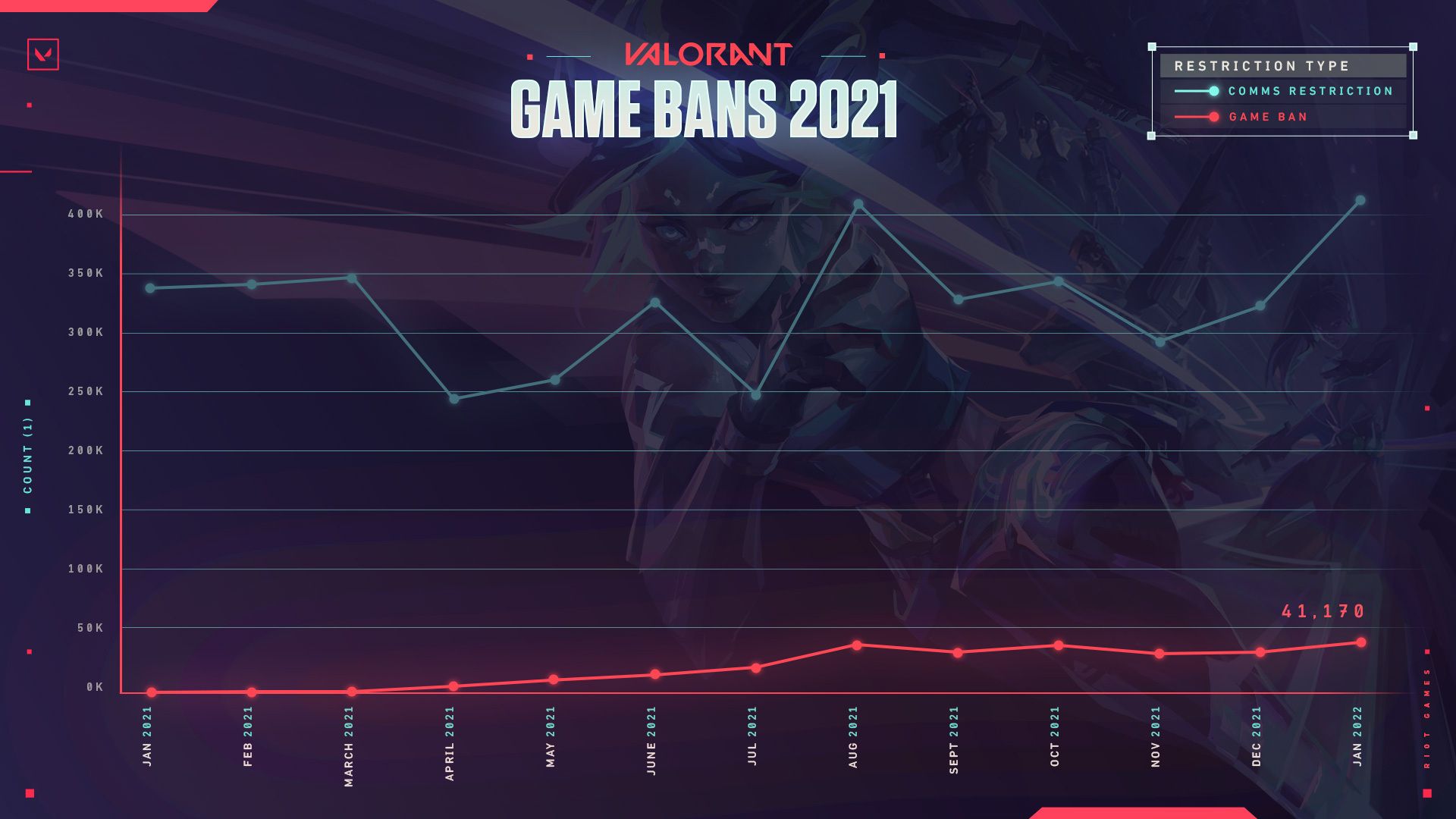

Next up are game restrictions. These are bans that we place on accounts that commit numerous, repeated instances of toxic communication. Bans can range from a few days (for smaller violations by relatively new offenders) to a year (for chronic offenders). Permanent bans are reserved for behavior that is especially egregious and/or repeated.

These bans didn’t ramp up on VALORANT until around the middle of last year (we were trying to make sure bans were fully justified). In January, we dished out over 40,000 bans.
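The escalating penalties described in this post can be sketched as a simple ladder. The steps, durations, and the rule that a "zero tolerance" offense skips straight to a harsh step are illustrative assumptions, not VALORANT's actual penalty table; `LADDER`, `ZERO_TOLERANCE_FLOOR`, and `next_penalty` are hypothetical names.

```python
# Hypothetical penalty ladder; steps and durations are illustrative only.
LADDER = [
    "warning",
    "chat/voice mute",
    "3-day game restriction",
    "14-day game restriction",
    "365-day game restriction",
    "permanent ban",
]

# Hypothetical: a zero-tolerance offense starts at least at this step.
ZERO_TOLERANCE_FLOOR = 3


def next_penalty(prior_offenses: int, zero_tolerance: bool = False) -> str:
    """Return the penalty for a new confirmed offense.

    Each prior confirmed offense moves the player one step up the ladder,
    capped at the top step. A zero-tolerance offense jumps to at least
    ZERO_TOLERANCE_FLOOR regardless of history.
    """
    if prior_offenses < 0:
        raise ValueError("prior_offenses must be non-negative")
    step = min(prior_offenses, len(LADDER) - 1)
    if zero_tolerance:
        step = max(step, ZERO_TOLERANCE_FLOOR)
    return LADDER[step]


print(next_penalty(0), "|", next_penalty(0, zero_tolerance=True))
```

Keying the step on a player's history is what lets a light first penalty (for smaller violations by relatively new offenders) coexist with long restrictions for chronic offenders.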

One last point of data we’d like to share: the above numbers are indicators of behaviors that we caught and punished, but not necessarily an indication that toxicity in VALORANT has gone down as a result. In fact, when we surveyed players, we noticed that the frequency with which players encounter harassment in our game hasn’t meaningfully gone down. Long story short, we know that the work we’ve done up to now is, at best, foundational, and there’s a ton more to build on top of it in 2022 and beyond.

We’re trying different ways to engage with the player base in the coming year to better understand where we can improve. We’ll also be transparent about the state of the tech we’re building and where our thinking is heading, so we can keep the feedback loop open with you all.

WHAT WORK IS PLANNED?

To be sure, we know there is more to do. So we now want to share a bit more about what we’re currently working on:

- Generally harsher punishments for existing systems: For some of our existing systems that detect and moderate toxicity, we’ve spent some time at a more “conservative” level while gathering data (to make sure we weren’t detecting incorrectly). We now feel a lot more confident in these detections, so we’ll begin to gradually increase the severity and escalation of these penalties. This should result in bad actors being dealt with more quickly.

- More immediate, real-time text moderation: While we currently detect “zero tolerance” words automatically when they’re typed in chat, the resulting punishments don’t occur until after the game has finished. We’re looking into ways to administer punishments immediately after the offense happens.

- Improvements to existing voice moderation: Currently, we rely on repeated player reports on an offender to determine whether voice chat abuse has occurred. Voice chat abuse is significantly harder to detect compared to text (and often involves a more manual process), but we’ve been taking incremental steps to make improvements. Instead of keeping everything under wraps until we feel like voice moderation is “perfect” (which it will never be), we’ll post regular updates on the changes and improvements we make to the system. Keep an eye out for the next update on this around the middle of this year.

- Regional Test Pilot Program: Our Turkish team recently rolled out a local pilot program to better combat toxicity in their region. In short, it creates a reporting line with Player Support agents, who oversee incoming reports dedicated strictly to player behavior and take action based on established guidelines. Consider this very much a beta, but if it shows enough promise, a version of it could spread to other regions.

Lastly, as we mentioned when we discussed AFKs, we’re committing to communicating both the changes we make and the results of those changes on a more regular cadence. It helps us stay accountable, and hopefully helps you stay informed!

EXTRA WORD ON VOICE

When a player experiences toxicity, especially in voice comms, we know how incredibly frustrating it is and how helpless it can make us feel, both during the game and after it. Not only does it undermine all the good in VALORANT, it can do lasting damage to our players and the community overall. Deterring and punishing toxic behavior in voice is a combined effort across Riot as a whole, and we are very much invested in making this a more enjoyable experience for everyone.

Voice Evaluation

Last year, Riot updated its Privacy Notice and Terms of Service to allow us to record and evaluate voice comms when a report for disruptive behavior is submitted, starting with VALORANT. As a complement to our ongoing game systems, we need clear evidence to verify violations of behavioral policies before we can take action, and that evidence also helps us explain to players why a particular behavior may have resulted in a penalty.

As of now, we are targeting a beta launch of the voice evaluation system later this year, starting in North America and in English only. We will then move toward a more global solution once we feel the tech is in a good place to broaden those horizons. Please note that this will be an initial pilot of a new idea built on brand-new tech that is still being developed, so the feature may take some time to bake before it becomes an effective tool in our arsenal. We’ll update you with concrete plans about how it’ll work well before we start collecting voice data in any form.

HOW YOU CAN HELP!

The only thing we’d ask is that you stay engaged with the systems we have in place. Please continue to report toxic behavior in the game; please utilize the Muted Words List if you encounter things you don’t want to see; and please continue to leave us feedback about your experiences in-game and what you’d like to see. By doing that, you’re helping us make VALORANT a safer place to play, and for that, we’re grateful.

We’ll see you in the next update! In the meantime, feel free to send any feedback or questions to members of the S&PD Team: